Installing a k8s Cluster from Binaries


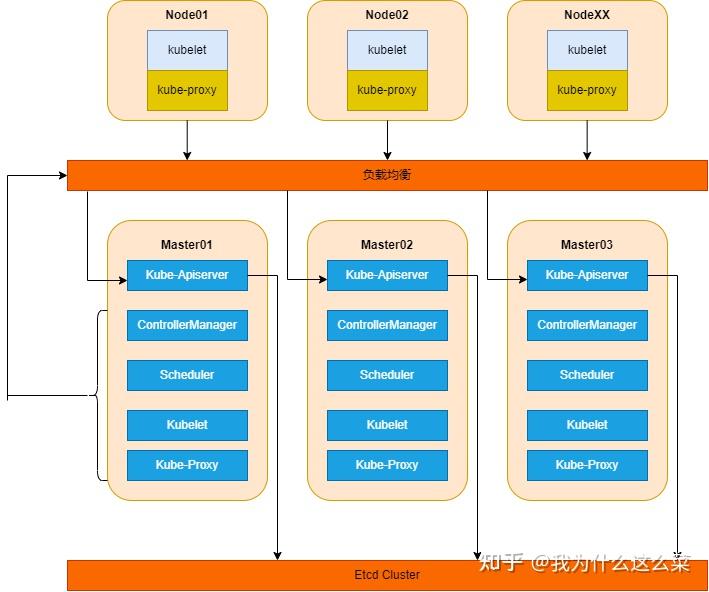

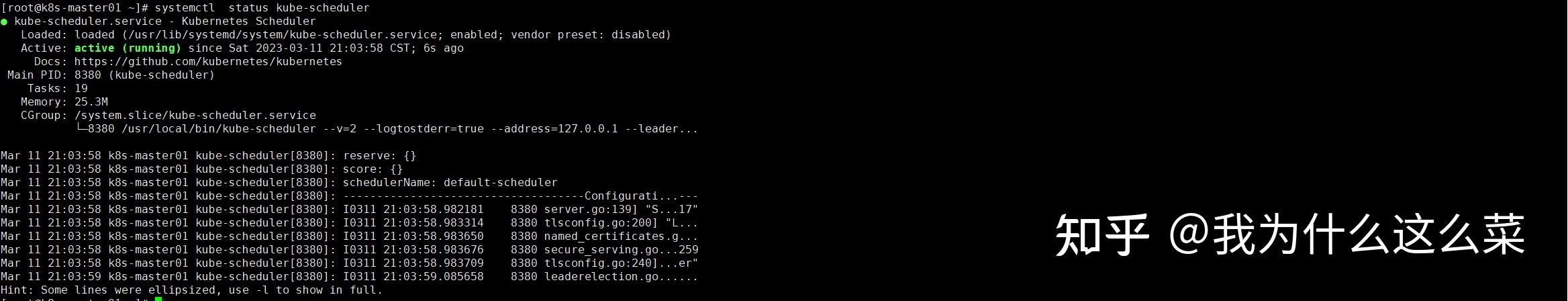

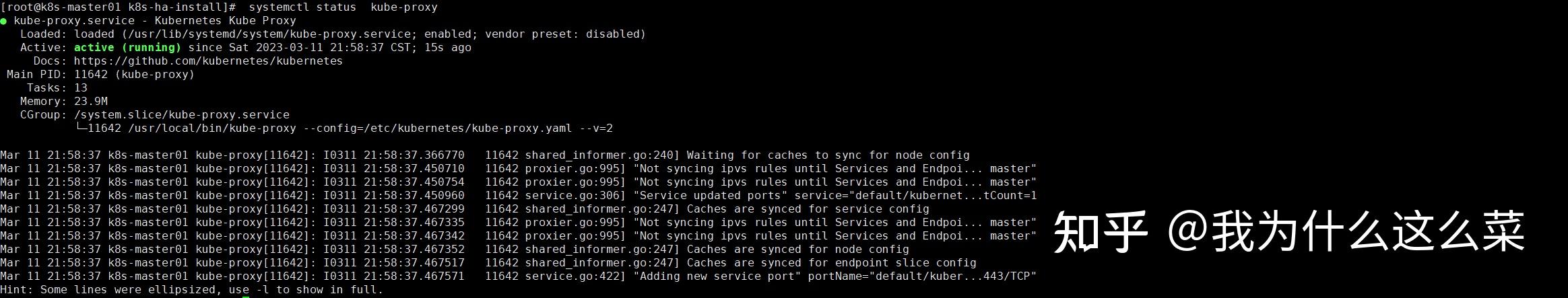

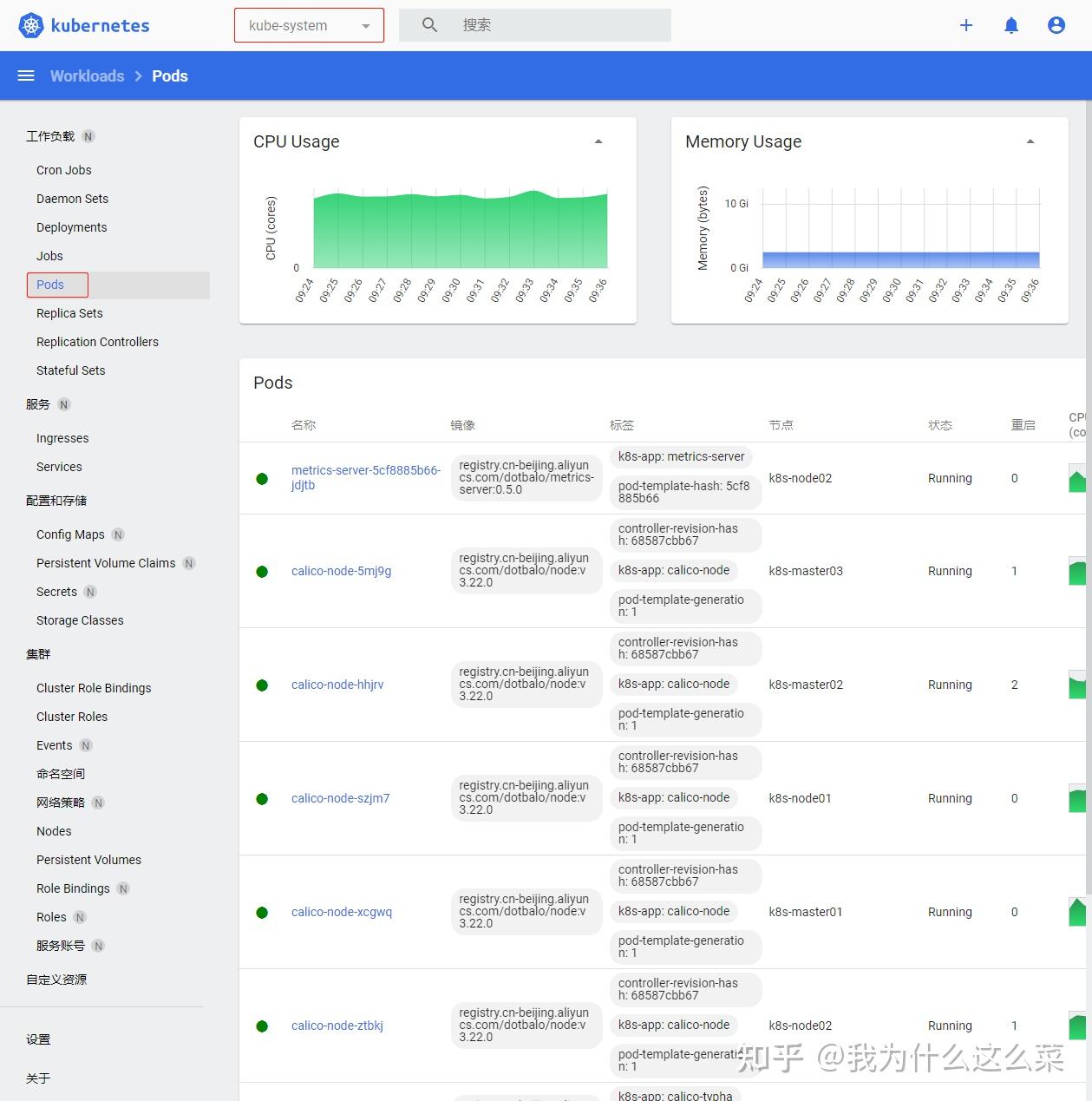

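0. Acknowledgments

Thanks to 宽哥 for his introductions and talks, which got me started with k8s and opened the door to the cloud-native world. He is the author of 《再也不踩坑的kubernetes实战指南》 and 《云原生Kubernetes全栈架构师实战》; if you are interested, his work is worth a look. Thanks also to the official k8s site for freely sharing its documentation.

I. Preface

This article walks through installing a k8s cluster from binaries. Before that, it briefly covers the k8s high-availability architecture and what each component does.

Note: I deployed and verified every step below myself.

II. k8s high-availability architecture

2.1 k8s high-availability architecture diagram

[Figure: k8s high-availability architecture]

A k8s cluster is made up of Master nodes and Node nodes. Master nodes are control nodes and Node nodes are worker nodes. In this deployment a Master node runs kube-apiserver, kube-scheduler, kube-controller-manager, etcd, kubelet, and kube-proxy; a Node node runs kubelet and kube-proxy.

The two node types are as follows.

1. Master node (control node)

The container orchestration layer. It exposes the API and interfaces used to define, deploy, and manage the lifecycle of containers, and it manages the worker nodes and Pods in the cluster. In production, the control plane usually runs across several machines and a cluster usually runs several nodes, which provides fault tolerance and high availability.

2. Node node (worker node)

Hosts Pods. A Pod carries the application workload and is the smallest deployable unit of computing that can be created and managed in Kubernetes. Every cluster has at least one worker node.

The Kubernetes components are described below.

1. Master components

(1) kube-apiserver

The API server is the control plane component that exposes the Kubernetes API and handles incoming requests. It is the front end of the Kubernetes control plane, and its main implementation is kube-apiserver. kube-apiserver is designed to scale horizontally: you can run several instances and balance traffic between them.

(2) etcd

A consistent, highly available key-value store used as the backing database for all cluster data. It is usually installed on the Master nodes; if resources allow, it can also run on dedicated servers.

(3) kube-scheduler

The control plane component that watches for newly created Pods with no assigned node and selects a node for each of them to run on. Scheduling decisions take into account the resource requirements of individual Pods and groups of Pods, hardware, software, and policy constraints, affinity and anti-affinity specifications, data locality, interference between workloads, and deadlines.

(4) kube-controller-manager

The control plane component that runs the controller processes. Logically each controller is a separate process, but to reduce complexity they are all compiled into a single binary and run in a single process. The controllers include:

- Node controller: notices and responds when nodes go down.
- Job controller: watches Job objects, which represent one-off tasks, and creates Pods to run those tasks to completion.
- EndpointSlice controller: populates EndpointSlice objects, which provide the link between Services and Pods.
- ServiceAccount controller: creates the default ServiceAccount for new namespaces.

(5) kubelet

kubelet runs on every node in the cluster and makes sure that containers are running in a Pod. It is listed among the Master components here because a Master is, strictly speaking, also a node. kubelet receives a set of PodSpecs through various mechanisms and ensures that the containers described in them are running and healthy. It does not manage containers that were not created by Kubernetes.

(6) kube-proxy

kube-proxy is a network proxy that runs on every node and implements part of the Kubernetes Service concept. It maintains network rules on the node, and these rules allow network sessions from inside or outside the cluster to reach Pods. If the operating system provides a packet filtering layer, kube-proxy uses it to implement the rules; otherwise kube-proxy forwards the traffic itself.

2. Node components

(1) kubelet: the same component described under the Master components above.

(2) kube-proxy: the same component described under the Master components above.

2.2 k8s high-availability architecture analysis

The load balancer here is built from KeepAlived and HAProxy. You can also use a public cloud load balancer or F5. With KeepAlived and HAProxy, a virtual IP (VIP) is created and the components use it to talk to each other.

When kube-scheduler, kube-controller-manager, kubelet, or kube-proxy need to reach kube-apiserver, the request goes through the load balancer first, which is what makes the API server highly available.

Only kube-apiserver talks to etcd. kube-scheduler, kube-controller-manager, kubelet, and kube-proxy never access etcd directly.

III. Comparing k8s installation methods

There are two common ways to install a k8s cluster: kubeadm and binaries. They differ as follows.

1. Installation: a cluster created with kubeadm is installed from pre-packaged binaries, while a binary installation requires downloading and installing the binaries by hand.
2. Deployment steps: kubeadm automates more of the deployment and is easier to maintain, while a binary installation requires configuring components by hand and has more steps.
3. Maintainability: a kubeadm cluster can be maintained with the kubeadm tool, for example to upgrade or add nodes, while a binary installation has to be managed and maintained by hand.
4. Community support: kubeadm is an official Kubernetes tool with better community support, documentation, and help, while a binary installation takes more self-study and practice.

IV. Highly available binary installation of a k8s cluster

4.1 Installation and tuning

4.1.1 Basic environment configuration

1. Environment

(1) Highly available Kubernetes cluster plan

| Hostname | IP address | Description |
| --- | --- | --- |
| k8s-master01 | 192.168.1.31/24 | master node |
| k8s-master02 | 192.168.1.32/24 | master node |
| k8s-master03 | 192.168.1.33/24 | master node |
| k8s-node01 | 192.168.1.34/24 | node node |
| k8s-node02 | 192.168.1.35/24 | node node |
| k8s-master-vip | 192.168.1.38/24 | keepalived virtual IP |

(2) Network plan

| Network | CIDR |
| --- | --- |
| Host network | 192.168.1.0/24 |
| Pod network | 172.16.0.0/12 |
| Service network | 10.0.0.0/16 |

(3) Virtual machine plan

Three Masters and two Nodes, each with 4 cores, 4 GB of memory, and 40G+40G of disk.

2. Configuration information

| Item | Notes |
| --- | --- |
| OS version | CentOS 7.9 |
| Docker version | 20.10.x |
| Kubeadm version | v1.23.17 |

    $ cat /etc/redhat-release
    CentOS Linux release 7.9.2009 (Core)
    $ docker --version
    Docker version 20.10.21, build baeda1f
    $ kubeadm version
    kubeadm version: &version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.17", GitCommit:"953be8927218ec8067e1af2641e540238ffd7576", GitTreeState:"clean", BuildDate:"2023-02-22T13:33:14Z", GoVersion:"go1.19.6", Compiler:"gc", Platform:"linux/amd64"}

Note: the host network, the k8s Service network, and the Pod network must not overlap!

3. Set the hostnames

(1) On each machine, set the hostname according to the plan (run the matching command on the matching machine)

    $ hostnamectl set-hostname k8s-master01
    $ hostnamectl set-hostname k8s-master02
    $ hostnamectl set-hostname k8s-master03
    $ hostnamectl set-hostname k8s-node01
    $ hostnamectl set-hostname k8s-node02

4. Edit the hosts file

(1) Install vim on every machine; skip this if it is already installed

    $ yum install vim -y

(2) Edit the hosts file on every machine

    $ vim /etc/hosts
    192.168.1.31 k8s-master01
    192.168.1.32 k8s-master02
    192.168.1.33 k8s-master03
    192.168.1.38 k8s-master-vip
    192.168.1.34 k8s-node01
    192.168.1.35 k8s-node02

Note: if the cluster is not highly available, use Master01's IP as the VIP above!

5. Configure the yum repositories

(1) On every machine, configure the default yum repository and install the dependencies

    $ curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
    $ yum install -y yum-utils device-mapper-persistent-data lvm2

(2) On every machine, configure the Docker yum repository

    $ yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

(3) On every machine, configure the kubernetes yum repository

    $ cat [...] /etc/timezone
    $ ntpdate time2.aliyun.com
    # add a scheduled task
    $ crontab -e
    */5 * * * * /usr/sbin/ntpdate time2.aliyun.com

9. Configure limits

(1) On every machine, configure the limits

    $ ulimit -SHn 65535
    $ vim /etc/security/limits.conf
    # append the following at the end of the file
    * soft nofile 65536
    * hard nofile 131072
    * soft nproc 65535
    * hard nproc 655350
    * soft memlock unlimited
    * hard memlock unlimited

10. Configure passwordless SSH login from Master01

(1) On Master01, run the following so that it can log in to the other nodes without a password

    $ ssh-keygen -t rsa    # press Enter three times
    $ for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02;do ssh-copy-id -i .ssh/id_rsa.pub $i;done

Note: after this you will be prompted four times for the passwords of the other nodes!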
(2) From Master01, log in to k8s-node02 remotely as a test; the login succeeds

    $ ssh k8s-node02

11. Download the source files

(1) Download the source files on Master01

    $ git clone https://gitee.com/jeckjohn/k8s-ha-install.git

(2) On Master01, list the branches

    $ cd k8s-ha-install
    $ git branch -a
    * master
      remotes/origin/HEAD -> origin/master
      remotes/origin/manual-installation-v1.16.x
      remotes/origin/manual-installation-v1.17.x
      remotes/origin/manual-installation-v1.18.x
      remotes/origin/manual-installation-v1.19.x
      remotes/origin/manual-installation-v1.20.x
      remotes/origin/manual-installation-v1.20.x-csi-hostpath
      remotes/origin/manual-installation-v1.21.x
      remotes/origin/manual-installation-v1.22.x
      remotes/origin/manual-installation-v1.23.x
      remotes/origin/manual-installation-v1.24.x
      remotes/origin/manual-installation-v1.25.x
      remotes/origin/master

12. Upgrade the system

(1) On every machine, upgrade the system

    $ yum update -y --exclude=kernel*

4.1.2 Kernel configuration

The default CentOS 7 kernel is 3.10.

    $ uname -a
    Linux k8s-master01 3.10.0-1160.el7.x86_64 #1 SMP Mon Oct 19 16:18:59 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

1. Upgrade the kernel

(1) Download the kernel packages on Master01

    $ cd /root
    $ wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm
    $ wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

(2) Copy them from Master01 to the other nodes

    $ for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02;do scp kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm $i:/root/ ; done

(3) On every machine, install the kernel

    $ cd /root && yum localinstall -y kernel-ml*

(4) On every machine, change the kernel boot order

    $ grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg
    $ grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

(5) On every machine, check that the default kernel is 4.19

    $ grubby --default-kernel
    /boot/vmlinuz-4.19.12-1.el7.elrepo.x86_64

(6) On every machine, reboot and check again that the running kernel is 4.19

    $ reboot
    $ uname -a
    Linux k8s-master02 4.19.12-1.el7.elrepo.x86_64 #1 SMP Fri Dec 21 11:06:36 EST 2018 x86_64 x86_64 x86_64 GNU/Linux

2. Configure the ipvs modules

(1) On every machine, install ipvsadm

    $ yum install ipvsadm ipset sysstat conntrack libseccomp -y

(2) On every machine, load the ipvs modules. From kernel 4.19 onward nf_conntrack_ipv4 has been renamed to nf_conntrack; on kernels below 4.18 use nf_conntrack_ipv4

    $ modprobe -- ip_vs
    $ modprobe -- ip_vs_rr
    $ modprobe -- ip_vs_wrr
    $ modprobe -- ip_vs_sh
    $ modprobe -- nf_conntrack

(3) On every machine, edit /etc/modules-load.d/ipvs.conf and append the following at the end of the file

    $ vim /etc/modules-load.d/ipvs.conf
    ip_vs
    ip_vs_lc
    ip_vs_wlc
    ip_vs_rr
    ip_vs_wrr
    ip_vs_lblc
    ip_vs_lblcr
    ip_vs_dh
    ip_vs_sh
    ip_vs_fo
    ip_vs_nq
    ip_vs_sed
    ip_vs_ftp
    nf_conntrack
    ip_tables
    ip_set
    xt_set
    ipt_set
    ipt_rpfilter
    ipt_REJECT
    ipip

(4) On every machine, load the modules at boot

    $ systemctl enable --now systemd-modules-load.service

(5) On every machine, enable the kernel parameters that a k8s cluster requires (k8s kernel settings for all nodes)

    $ cat [...]

[Figure: verification]

4.2 Installing the basic components

4.2.1 Containerd as the runtime

For versions below 1.24 you can choose either Docker or Containerd; for 1.24 and later, choose Containerd as the runtime.

1. On every machine, install docker-ce-20.10. Note that installing docker also installs Containerd

    $ yum install docker-ce-20.10.* docker-ce-cli-20.10.* -y

2. On every machine, configure the modules that Containerd needs

    $ cat [...]

[Figure: changing the Pause image in sandbox_image]

9. On every machine, start Containerd and enable it at boot

    $ systemctl daemon-reload
    $ systemctl enable --now containerd
    $ ls /run/containerd/containerd.sock
    /run/containerd/containerd.sock

10. On every machine, configure the runtime endpoint that the crictl client connects to

    $ cat > /etc/crictl.yaml [...]

[Figure: checking the etcd status]

4.5 Installing the high-availability components

On a public cloud, use the cloud's own load balancer, such as Alibaba Cloud SLB or Tencent Cloud CLB, in place of haproxy and keepalived, because most public clouds do not support keepalived. In addition, on Alibaba Cloud the kubectl client cannot be placed on a master node. I recommend Tencent Cloud: Alibaba Cloud's SLB has a loopback problem, meaning that a server behind the SLB cannot access the SLB itself, whereas Tencent Cloud has fixed this problem.

Note: if the cluster is not highly available, haproxy and keepalived do not need to be installed!
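Before moving on to the load balancer, it can be useful to confirm that the ipvs modules loaded in section 4.1.2 are really present after the reboot. The following is a minimal sketch, not part of the original procedure: the function name `missing_modules` and its optional file argument are assumptions made for illustration, and the module list simply mirrors the `modprobe` commands above.

```shell
#!/usr/bin/env bash
# Sketch: report which of the modules loaded with modprobe in 4.1.2 are absent.
# REQUIRED_MODULES and missing_modules are illustrative names, not from the article.

REQUIRED_MODULES="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack"

# Print each required module that does not appear in the given module list.
# The list defaults to /proc/modules, where every line starts with the
# module name followed by a space.
missing_modules() {
  local modules_file=${1:-/proc/modules}
  local m
  for m in $REQUIRED_MODULES; do
    grep -q "^${m} " "$modules_file" 2>/dev/null || echo "$m"
  done
}

missing=$(missing_modules)
if [ -n "$missing" ]; then
  echo "Not loaded:" $missing
else
  echo "All required ipvs modules are loaded"
fi
```

Reading `/proc/modules` instead of calling `lsmod` keeps the check free of extra tools; passing a file path as the first argument makes the function easy to try against a saved module list.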
1. Install HAProxy

(1) On all Master nodes, install HAProxy and KeepAlived with yum

    $ yum install keepalived haproxy -y

(2) On all Master nodes, configure HAProxy. The HAProxy configuration is identical on every Master node

    $ mkdir /etc/haproxy
    $ > /etc/haproxy/haproxy.cfg
    $ vim /etc/haproxy/haproxy.cfg
    global
      maxconn 2000
      ulimit-n 16384
      log 127.0.0.1 local0 err
      stats timeout 30s

    defaults
      log global
      mode http
      option httplog
      timeout connect 5000
      timeout client 50000
      timeout server 50000
      timeout http-request 15s
      timeout http-keep-alive 15s

    frontend k8s-master
      bind 0.0.0.0:8443
      bind 127.0.0.1:8443
      mode tcp
      option tcplog
      tcp-request inspect-delay 5s
      default_backend k8s-master

    backend k8s-master
      mode tcp
      option tcplog
      option tcp-check
      balance roundrobin
      default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
      server k8s-master01 192.168.1.31:6443 check
      server k8s-master02 192.168.1.32:6443 check
      server k8s-master03 192.168.1.33:6443 check

2. Install KeepAlived

Configure KeepAlived on all Master nodes. The configuration differs per node, so pay attention to each node's IP and network interface (the interface parameter).

(1) Master01 configuration

    $ mkdir /etc/keepalived
    $ > /etc/keepalived/keepalived.conf
    $ vim /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        mcast_src_ip 192.168.1.31
        virtual_router_id 51
        priority 101
        nopreempt
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.1.38
        }
        track_script {
            chk_apiserver
        }
    }

(2) Master02 configuration

Run the same three commands and use the same file as on Master01, changing only these lines inside vrrp_instance VI_1:

    state BACKUP
    mcast_src_ip 192.168.1.32
    priority 100

(3) Master03 configuration

Run the same three commands and use the same file as on Master01, changing only these lines inside vrrp_instance VI_1:

    state BACKUP
    mcast_src_ip 192.168.1.33
    priority 100

(4) On all master nodes, create the KeepAlived health check script

    $ vim /etc/keepalived/check_apiserver.sh
    #!/bin/bash

    # initialize the error counter
    err=0
    # check up to three times whether the HAProxy process is running
    for k in $(seq 1 3)
    do
        check_code=$(pgrep haproxy)
        if [[ $check_code == "" ]]; then
            # process not found: increment the error counter and wait one second
            err=$(expr $err + 1)
            sleep 1
            continue
        else
            # process found: reset the error counter and leave the loop
            err=0
            break
        fi
    done

    # depending on the error counter, stop keepalived and exit
    if [[ $err != "0" ]]; then
        echo "systemctl stop keepalived"
        /usr/bin/systemctl stop keepalived
        exit 1
    else
        exit 0
    fi

    # make the script executable
    $ chmod +x /etc/keepalived/check_apiserver.sh

3. On all master nodes, start haproxy and keepalived

    $ systemctl daemon-reload
    $ systemctl enable --now haproxy
    $ systemctl enable --now keepalived

4. Test the VIP to verify that keepalived works

    $ ping 192.168.1.38 -c 4

4.6 k8s component configuration

On all nodes, create the required directories

    $ mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

4.6.1 Apiserver

1. Master01 configuration

    $ vim /usr/lib/systemd/system/kube-apiserver.service
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes
    After=network.target

    [Service]
    ExecStart=/usr/local/bin/kube-apiserver \
          --v=2 \
          --logtostderr=true \
          --allow-privileged=true \
          --bind-address=0.0.0.0 \
          --secure-port=6443 \
          --insecure-port=0 \
          --advertise-address=192.168.1.31 \
          --service-cluster-ip-range=10.0.0.0/16 \
          --service-node-port-range=30000-32767 \
          --etcd-servers=https://192.168.1.31:2379,https://192.168.1.32:2379,https://192.168.1.33:2379 \
          --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
          --etcd-certfile=/etc/etcd/ssl/etcd.pem \
          --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
          --client-ca-file=/etc/kubernetes/pki/ca.pem \
          --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
          --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
          --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
          --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
          --service-account-key-file=/etc/kubernetes/pki/sa.pub \
          --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
          --service-account-issuer=https://kubernetes.default.svc.cluster.local \
          --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
          --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
          --authorization-mode=Node,RBAC \
          --enable-bootstrap-token-auth=true \
          --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
          --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
          --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
          --requestheader-allowed-names=aggregator \
          --requestheader-group-headers=X-Remote-Group \
          --requestheader-extra-headers-prefix=X-Remote-Extra- \
          --requestheader-username-headers=X-Remote-User
          # --token-auth-file=/etc/kubernetes/token.csv

    Restart=on-failure
    RestartSec=10s
    LimitNOFILE=65535

    [Install]
    WantedBy=multi-user.target

2. Master02 configuration

Create the same /usr/lib/systemd/system/kube-apiserver.service as on Master01, changing only the advertise address:

    --advertise-address=192.168.1.32 \

3. Master03 configuration

Create the same /usr/lib/systemd/system/kube-apiserver.service as on Master01, changing only the advertise address:

    --advertise-address=192.168.1.33 \

4. On all Master nodes, start kube-apiserver and check its status

    $ systemctl daemon-reload && systemctl enable --now kube-apiserver
    $ systemctl status kube-apiserver

[Figure: kube-apiserver status]

4.6.2 ControllerManager

1. On all Master nodes, configure the kube-controller-manager service

    $ vim /usr/lib/systemd/system/kube-controller-manager.service
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes
    After=network.target

    [Service]
    ExecStart=/usr/local/bin/kube-controller-manager \
          --v=2 \
          --logtostderr=true \
          --address=127.0.0.1 \
          --root-ca-file=/etc/kubernetes/pki/ca.pem \
          --cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \
          --cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \
          --service-account-private-key-file=/etc/kubernetes/pki/sa.key \
          --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \
          --leader-elect=true \
          --use-service-account-credentials=true \
          --node-monitor-grace-period=40s \
          --node-monitor-period=5s \
          --pod-eviction-timeout=2m0s \
          --controllers=*,bootstrapsigner,tokencleaner \
          --allocate-node-cidrs=true \
          --cluster-cidr=172.16.0.0/12 \
          --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
          --node-cidr-mask-size=24

    Restart=always
    RestartSec=10s

    [Install]
    WantedBy=multi-user.target

2. On all Master nodes, start kube-controller-manager

    $ systemctl daemon-reload
    $ systemctl enable --now kube-controller-manager

3. On all Master nodes, check the ControllerManager status

    $ systemctl status kube-controller-manager

[Figure: ControllerManager status]

4.6.3 Scheduler

1. On all Master nodes, configure the kube-scheduler service

    $ vim /usr/lib/systemd/system/kube-scheduler.service
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/kubernetes/kubernetes
    After=network.target

    [Service]
    ExecStart=/usr/local/bin/kube-scheduler \
          --v=2 \
          --logtostderr=true \
          --address=127.0.0.1 \
          --leader-elect=true \
          --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

    Restart=always
    RestartSec=10s

    [Install]
    WantedBy=multi-user.target

2. On all Master nodes, start the Scheduler

    $ systemctl daemon-reload
    $ systemctl enable --now kube-scheduler

3. On all Master nodes, check the Scheduler status

    $ systemctl status kube-scheduler

[Figure: Scheduler status]

4.7 TLS Bootstrapping configuration

1. On Master01, create the bootstrap kubeconfig

    $ cd /root/k8s-ha-install/bootstrap
    $ kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.1.38:8443 --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig
    $ kubectl config set-credentials tls-bootstrap-token-user --token=c8ad9c.2e4d610cf3e7426e --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig
    $ kubectl config set-context tls-bootstrap-token-user@kubernetes --cluster=kubernetes --user=tls-bootstrap-token-user --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig
    $ kubectl config use-context tls-bootstrap-token-user@kubernetes --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

Note: if you change the token c8ad9c.2e4d610cf3e7426e above, you must make the matching change to token-id and token-secret in bootstrap.secret.yaml.

[Figure: the matching change in bootstrap.secret.yaml]

2. On Master01, copy admin.kubeconfig

    $ mkdir -p /root/.kube ; cp /etc/kubernetes/admin.kubeconfig /root/.kube/config

3. On Master01, query the cluster status

    $ kubectl get cs
    Warning: v1 ComponentStatus is deprecated in v1.19+
    NAME                 STATUS    MESSAGE                         ERROR
    controller-manager   Healthy   ok
    scheduler            Healthy   ok
    etcd-2               Healthy   {"health":"true","reason":""}
    etcd-0               Healthy   {"health":"true","reason":""}
    etcd-1               Healthy   {"health":"true","reason":""}

4. On Master01, create the bootstrap secret

    $ cd /root/k8s-ha-install/bootstrap
    $ kubectl create -f bootstrap.secret.yaml

4.8 Node configuration

4.8.1 Copy the certificates

1. On Master01, copy the certificates to the other nodes

    $ cd /etc/kubernetes/
    $ for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do
        ssh $NODE mkdir -p /etc/kubernetes/pki
        for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig; do
          scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}
        done
      done

4.8.2 kubelet configuration

1. On all nodes, create the required directories

    $ mkdir -p /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d /etc/kubernetes/manifests/

2. On all nodes, configure the kubelet service

    $ vim /usr/lib/systemd/system/kubelet.service
    [Unit]
    Description=Kubernetes Kubelet
    Documentation=https://github.com/kubernetes/kubernetes

    [Service]
    ExecStart=/usr/local/bin/kubelet

    Restart=always
    StartLimitInterval=0
    RestartSec=10

    [Install]
    WantedBy=multi-user.target

3. On all nodes, create the drop-in configuration for the kubelet service

    $ vim /etc/systemd/system/kubelet.service.d/10-kubelet.conf
    [Service]
    Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"
    Environment="KUBELET_SYSTEM_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock --cgroup-driver=systemd"
    Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml"
    Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' "
    ExecStart=
    ExecStart=/usr/local/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_SYSTEM_ARGS $KUBELET_EXTRA_ARGS

4. On all nodes, create the kubelet configuration file

    $ vim /etc/kubernetes/kubelet-conf.yml
    apiVersion: kubelet.config.k8s.io/v1beta1
    kind: KubeletConfiguration
    address: 0.0.0.0
    port: 10250
    readOnlyPort: 10255
    authentication:
      anonymous:
        enabled: false
      webhook:
        cacheTTL: 2m0s
        enabled: true
      x509:
        clientCAFile: /etc/kubernetes/pki/ca.pem
    authorization:
      mode: Webhook
      webhook:
        cacheAuthorizedTTL: 5m0s
        cacheUnauthorizedTTL: 30s
    cgroupDriver: systemd
    cgroupsPerQOS: true
    clusterDNS:
    - 10.0.0.10
    clusterDomain: cluster.local
    containerLogMaxFiles: 5
    containerLogMaxSize: 10Mi
    contentType: application/vnd.kubernetes.protobuf
    cpuCFSQuota: true
    cpuManagerPolicy: none
    cpuManagerReconcilePeriod: 10s
    enableControllerAttachDetach: true
    enableDebuggingHandlers: true
    enforceNodeAllocatable:
    - pods
    eventBurst: 10
    eventRecordQPS: 5
    evictionHard:
      imagefs.available: 15%
      memory.available: 100Mi
      nodefs.available: 10%
      nodefs.inodesFree: 5%
    evictionPressureTransitionPeriod: 5m0s
    failSwapOn: true
    fileCheckFrequency: 20s
    hairpinMode: promiscuous-bridge
    healthzBindAddress: 127.0.0.1
    healthzPort: 10248
    httpCheckFrequency: 20s
    imageGCHighThresholdPercent: 85
    imageGCLowThresholdPercent: 80
    imageMinimumGCAge: 2m0s
    iptablesDropBit: 15
    iptablesMasqueradeBit: 14
    kubeAPIBurst: 10
    kubeAPIQPS: 5
    makeIPTablesUtilChains: true
    maxOpenFiles: 1000000
    maxPods: 110
    nodeStatusUpdateFrequency: 10s
    oomScoreAdj: -999
    podPidsLimit: -1
    registryBurst: 10
    registryPullQPS: 5
    resolvConf: /etc/resolv.conf
    rotateCertificates: true
    runtimeRequestTimeout: 2m0s
    serializeImagePulls: true
    staticPodPath: /etc/kubernetes/manifests
    streamingConnectionIdleTimeout: 4h0m0s
    syncFrequency: 1m0s
    volumeStatsAggPeriod: 1m0s

5. On all nodes, start kubelet

    $ systemctl daemon-reload
    $ systemctl enable --now kubelet

6. Check the cluster status

    $ kubectl get node
    NAME           STATUS     ROLES    AGE   VERSION
    k8s-master01   NotReady   <none>   1s    v1.23.17
    k8s-master02   NotReady   <none>   1s    v1.23.17
    k8s-master03   NotReady   <none>   1s    v1.23.17
    k8s-node01     NotReady   <none>   1s    v1.23.17
    k8s-node02     NotReady   <none>   1s    v1.23.17

7. On all nodes, check the system log for errors

    $ tail -f /var/log/messages

[Figure: checking the system log for errors]

Note: the errors in the log above appear because calico is not installed yet; they can be ignored for now!
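The NotReady state shown above is expected until the network plugin is installed, so it helps to have a quick way to list which nodes are still not Ready when you come back to this check later. The sketch below is an illustration rather than part of the original procedure: the helper name `not_ready_nodes` is an assumption, and it relies only on `kubectl get node --no-headers`, whose second column is STATUS.

```shell
#!/usr/bin/env bash
# Sketch: list nodes whose STATUS column is not exactly "Ready".
# not_ready_nodes is an illustrative helper name, not from the article.

# Read `kubectl get node --no-headers` style lines on stdin and print the
# NAME of every node whose second column differs from "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is therefore listed as well.
not_ready_nodes() {
  awk 'NF && $2 != "Ready" { print $1 }'
}

# Only query the cluster when kubectl is installed on this machine.
if command -v kubectl >/dev/null 2>&1; then
  if nodes=$(kubectl get node --no-headers 2>/dev/null); then
    pending=$(printf '%s\n' "$nodes" | not_ready_nodes)
    if [ -n "$pending" ]; then
      echo "Nodes not Ready yet:" $pending
    else
      echo "All nodes report Ready"
    fi
  else
    echo "kubectl could not query the cluster"
  fi
fi
```

Keeping the parsing in a function that reads stdin means it can be tried on saved output without access to a cluster.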
4.8.3 kube-proxy configuration

1. On Master01, generate the kube-proxy.kubeconfig file

    $ cd /root/k8s-ha-install
    $ kubectl -n kube-system create serviceaccount kube-proxy
    $ kubectl create clusterrolebinding system:kube-proxy --clusterrole system:node-proxier --serviceaccount kube-system:kube-proxy
    $ SECRET=$(kubectl -n kube-system get sa/kube-proxy \
        --output=jsonpath='{.secrets[0].name}')
    $ JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET \
        --output=jsonpath='{.data.token}' | base64 -d)
    $ PKI_DIR=/etc/kubernetes/pki
    $ K8S_DIR=/etc/kubernetes
    $ kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.1.38:8443 --kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig
    $ kubectl config set-credentials kubernetes --token=${JWT_TOKEN} --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig
    $ kubectl config set-context kubernetes --cluster=kubernetes --user=kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig
    $ kubectl config use-context kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

2. On Master01, send the kubeconfig to the other nodes

(1) Send the kubeconfig from Master01 to the other Master nodes

    $ for NODE in k8s-master02 k8s-master03; do
        scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig
      done

(2) Send the kubeconfig from Master01 to the Node nodes

    $ for NODE in k8s-node01 k8s-node02; do
        scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig
      done

3. On all nodes, add the kube-proxy service file and configuration

    $ vim /usr/lib/systemd/system/kube-proxy.service
    [Unit]
    Description=Kubernetes Kube Proxy
    Documentation=https://github.com/kubernetes/kubernetes
    After=network.target

    [Service]
    ExecStart=/usr/local/bin/kube-proxy \
      --config=/etc/kubernetes/kube-proxy.yaml \
      --v=2

    Restart=always
    RestartSec=10s

    [Install]
    WantedBy=multi-user.target

    $ vim /etc/kubernetes/kube-proxy.yaml
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    bindAddress: 0.0.0.0
    clientConnection:
      acceptContentTypes: ""
      burst: 10
      contentType: application/vnd.kubernetes.protobuf
      kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
      qps: 5
    clusterCIDR: 172.16.0.0/12
    configSyncPeriod: 15m0s
    conntrack:
      max: null
      maxPerCore: 32768
      min: 131072
      tcpCloseWaitTimeout: 1h0m0s
      tcpEstablishedTimeout: 24h0m0s
    enableProfiling: false
    healthzBindAddress: 0.0.0.0:10256
    hostnameOverride: ""
    iptables:
      masqueradeAll: false
      masqueradeBit: 14
      minSyncPeriod: 0s
      syncPeriod: 30s
    ipvs:
      masqueradeAll: true
      minSyncPeriod: 5s
      scheduler: "rr"
      syncPeriod: 30s
    kind: KubeProxyConfiguration
    metricsBindAddress: 127.0.0.1:10249
    mode: "ipvs"
    nodePortAddresses: null
    oomScoreAdj: -999
    portRange: ""
    udpIdleTimeout: 250ms

4. On all nodes, start kube-proxy

    $ systemctl daemon-reload
    $ systemctl enable --now kube-proxy

5. On all nodes, check kube-proxy

    $ systemctl status kube-proxy

[Figure: kube-proxy status]

6. On Master01, check the Services

    $ kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   74m

4.9 Installing Calico

1. On Master01, set the calico Pod network

    $ cd /root/k8s-ha-install/calico/
    $ sed -i "s#POD_CIDR#172.16.0.0/12#g" calico.yaml

2. On Master01, verify that the calico network was changed

    $ grep "IPV4POOL_CIDR" calico.yaml -A 1
    - name: CALICO_IPV4POOL_CIDR
      value: "172.16.0.0/12"

3. On Master01, install calico

    $ kubectl apply -f calico.yaml

4. On Master01, check the Pod status

    $ kubectl get po -n kube-system
    NAME                                       READY   STATUS    RESTARTS   AGE
    calico-kube-controllers-6f6595874c-lgc47   1/1     Running   0          9m57s
    calico-node-5pdrk                          1/1     Running   0          9m57s
    calico-node-6zgnd                          1/1     Running   0          9m57s
    calico-node-b4ktd                          1/1     Running   0          9m57s
    calico-node-bkplf                          1/1     Running   0          9m57s
    calico-node-rvnrq                          1/1     Running   0          9m57s
    calico-typha-6b6cf8cbdf-llcms              1/1     Running   0          9m57s

4.10 Installing CoreDNS

1. On Master01, set the coredns Service IP to the tenth IP of the k8s Service network

    $ cd /root/k8s-ha-install/CoreDNS
    $ COREDNS_SERVICE_IP=`kubectl get svc | grep kubernetes | awk '{print $3}'`0
    $ sed -i "s#KUBEDNS_SERVICE_IP#${COREDNS_SERVICE_IP}#g" coredns.yaml

2. On Master01, install coredns

    $ cd /root/k8s-ha-install/CoreDNS
    $ kubectl create -f coredns.yaml

3. On Master01, check the Pod status

    $ kubectl get po -n kube-system | grep coredns
    coredns-5db5696c7-9m9s8   1/1   Running   0   88s

4.11 Installing Metrics Server

1. On Master01, install metrics server

    $ cd /root/k8s-ha-install/metrics-server
    $ kubectl create -f .

2. On Master01, check the metrics server status

    $ kubectl get po -n kube-system | grep metrics
    metrics-server-6bf7dcd649-v7gqt   1/1   Running   0   2m23s

4.12 Installing Dashboard

Dashboard is the web-based Kubernetes user interface. You can use it to deploy containerized applications to a Kubernetes cluster, troubleshoot them, and manage cluster resources. It gives an overview of the applications running in the cluster and lets you create or modify Kubernetes resources such as Deployments, Jobs, and DaemonSets. For example, you can scale a Deployment, start a rolling update, restart a Pod, or deploy a new application with a wizard. Dashboard also shows the state of the resources in the cluster and any errors.

1. On Master01, install Dashboard

    $ cd /root/k8s-ha-install/dashboard/
    $ kubectl create -f .

2. On Master01, check the Dashboard status

    $ kubectl get po -n kubernetes-dashboard
    NAME                                         READY   STATUS    RESTARTS   AGE
    dashboard-metrics-scraper-7fcdff5f4c-p9cjq   1/1     Running   0          2m36s
    kubernetes-dashboard-85f59f8ff7-zhbsd        1/1     Running   0          2m36s

3. On Master01, check the Dashboard Services

    $ kubectl get svc -n kubernetes-dashboard
    NAME                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)         AGE
    dashboard-metrics-scraper   ClusterIP   10.0.174.237   <none>        8000/TCP        2m52s
    kubernetes-dashboard        NodePort    10.0.187.20    <none>        443:31846/TCP   2m52s

4. Add startup flags to the Google Chrome shortcut to work around Dashboard being unreachable

(1) Right-click the Google Chrome shortcut and choose [Properties]

(2) In the [Target] field, append the flags below. Note again that --test-type --ignore-certificate-errors goes after the value that is already in the field

    --test-type --ignore-certificate-errors

[Figure: adding the startup flags]

5. On Master01, look up the token

    $ kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')
    Name:         admin-user-token-98765
    Namespace:    kube-system
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: admin-user
                  kubernetes.io/service-account.uid: 95a305e4-620d-4e0f-ab58-b50ee7cdabd6

    Type:  kubernetes.io/service-account-token

    Data
    ====
    namespace:  11 bytes
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6Ik52bDFLMFZUNEZiN3gybFFfTWxfM0hORDUzRHdPcm1wM2s5VjBOZ3YtYU0ifQ... (output truncated)

6. Open Google Chrome and go to https://<any node IP>:<service port>. Master01 is used as the example here (if access fails, see k8s排错-Google浏览器打不开k8s中dashboard)

    https://192.168.1.31:31846

[Figure: access test]

7. Switch the namespace to kube-system; the default namespace has no resources

[Figure: Dashboard after login]

4.13 Kubectl auto-completion

1. On Master01, enable kubectl auto-completion

    $ source <(kubectl completion bash)
    $ echo "source <(kubectl completion bash)" >> ~/.bashrc

2. On Master01, set up a shorthand alias for kubectl

    $ alias k=kubectl
    $ complete -o default -F __start_kubectl k

4.14 Verifying cluster availability

1. On Master01, check that the nodes are healthy; all of them should be Ready

    $ kubectl get node
    NAME           STATUS   ROLES    AGE    VERSION
    k8s-master01   Ready    <none>   105m   v1.23.17
    k8s-master02   Ready    <none>   105m   v1.23.17
    k8s-master03   Ready    <none>   105m   v1.23.17
    k8s-node01     Ready    <none>   105m   v1.23.17
    k8s-node02     Ready    <none>   105m   v1.23.17

2. On Master01, check that all Pods are healthy; READY should have the form N/N and STATUS should be Running for every Pod

    $ kubectl get pod -A
    NAMESPACE              NAME                                         READY   STATUS    RESTARTS   AGE
    kube-system            calico-kube-controllers-6f6595874c-lgc47     1/1     Running   0          83m
    kube-system            calico-node-5pdrk                            1/1     Running   0          83m
    kube-system            calico-node-6zgnd                            1/1     Running   0          83m
    kube-system            calico-node-b4ktd                            1/1     Running   0          83m
    kube-system            calico-node-bkplf                            1/1     Running   0          83m
    kube-system            calico-node-rvnrq                            1/1     Running   0          83m
    kube-system            calico-typha-6b6cf8cbdf-llcms                1/1     Running   0          83m
    kube-system            coredns-5db5696c7-9m9s8                      1/1     Running   0          68m
    kube-system            metrics-server-6bf7dcd649-v7gqt              1/1     Running   0          61m
    kubernetes-dashboard   dashboard-metrics-scraper-7fcdff5f4c-p9cjq   1/1     Running   0          56m
    kubernetes-dashboard   kubernetes-dashboard-85f59f8ff7-gxmp7        1/1     Running   0          22m

3. On Master01, check that the cluster networks do not conflict

(1) On Master01, check the Service network

    $ kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.0.0.1     <none>        443/TCP   155m

(2) On Master01, check the Pod network. The addresses fall into two groups: Pods that use HostNetwork have host network addresses, and the rest have Pod network addresses

    $ kubectl get po -A -owide
    NAMESPACE              NAME                                         READY   STATUS    RESTARTS   AGE   IP               NODE           NOMINATED NODE   READINESS GATES
    kube-system            calico-kube-controllers-6f6595874c-lgc47     1/1     Running   0          85m   172.27.14.193    k8s-node02     <none>           <none>
    kube-system            calico-node-5pdrk                            1/1     Running   0          85m   192.168.1.35     k8s-node02     <none>           <none>
    kube-system            calico-node-6zgnd                            1/1     Running   0          85m   192.168.1.32     k8s-master02   <none>           <none>
    kube-system            calico-node-b4ktd                            1/1     Running   0          85m   192.168.1.33     k8s-master03   <none>           <none>
    kube-system            calico-node-bkplf                            1/1     Running   0          85m   192.168.1.31     k8s-master01   <none>           <none>
    kube-system            calico-node-rvnrq                            1/1     Running   0          85m   192.168.1.34     k8s-node01     <none>           <none>
    kube-system            calico-typha-6b6cf8cbdf-llcms                1/1     Running   0          85m   192.168.1.35     k8s-node02     <none>           <none>
    kube-system            coredns-5db5696c7-9m9s8                      1/1     Running   0          69m   172.24.92.65     k8s-master02   <none>           <none>
    kube-system            metrics-server-6bf7dcd649-v7gqt              1/1     Running   0          63m   172.17.124.1     k8s-node01     <none>           <none>
    kubernetes-dashboard   dashboard-metrics-scraper-7fcdff5f4c-p9cjq   1/1     Running   0          58m   172.24.244.193   k8s-master01   <none>           <none>
    kubernetes-dashboard   kubernetes-dashboard-85f59f8ff7-gxmp7        1/1     Running   0          23m   172.18.194.1     k8s-master03   <none>           <none>

4. On Master01, check that resources can be created normally

(1) On Master01, create a deployment named cluster-test

    $ kubectl create deploy cluster-test --image=registry.cn-hangzhou.aliyuncs.com/zq-demo/debug-tools -- sleep 3600

(2) On Master01, check that the deployment was created

    $ kubectl get po
    NAME                            READY   STATUS    RESTARTS      AGE
    cluster-test-79b978867f-4x2lw   1/1     Running   8 (19m ago)   8h

5. On Master01, check that a Pod can resolve Services

(1) On Master01, resolve kubernetes; the address matches the Service address above

    $ kubectl exec -it cluster-test-79b978867f-4x2lw -- bash
    (23:59 cluster-test-79b978867f-4x2lw:/) nslookup kubernetes
    Server:   10.0.0.10
    Address:  10.0.0.10#53

    Name:     kubernetes.default.svc.cluster.local
    Address:  10.0.0.1

(2) On Master01, resolve kube-dns.kube-system; the address is the tenth IP of the Service network, as configured for CoreDNS

    $ kubectl exec -it cluster-test-79b978867f-4x2lw -- bash
    (23:59 cluster-test-79b978867f-4x2lw:/) nslookup kube-dns.kube-system
    Server:   10.0.0.10
    Address:  10.0.0.10#53

    Name:     kube-dns.kube-system.svc.cluster.local
    Address:  10.0.0.10

6. Check that every node can reach port 443 of the kubernetes Service and port 53 of the kube-dns Service

(1) On every machine, test access to port 443 of the kubernetes Service

    $ curl https://10.0.0.1:443
    curl: (60) Peer's Certificate issuer is not recognized.
    More details here: http://curl.haxx.se/docs/sslcerts.html

    curl performs SSL certificate verification by default, using a "bundle"
     of Certificate Authority (CA) public keys (CA certs). If the default
     bundle file isn't adequate, you can specify an alternate file
     using the --cacert option.
    If this HTTPS server uses a certificate signed by a CA represented in
     the bundle, the certificate verification probably failed due to a
     problem with the certificate (it might be expired, or the name might
     not match the domain name in the URL).
    If you'd like to turn off curl's verification of the certificate, use
     the -k (or --insecure) option.

(2) On every machine, test access to port 53 of the kube-dns Service

    $ curl 10.0.0.10:53
    curl: (52) Empty reply from server

7. Check that Pods and machines can communicate

(1) On Master01, look up the Pod IP

    $ kubectl get po -owide
    NAME                            READY   STATUS    RESTARTS      AGE   IP             NODE           NOMINATED NODE   READINESS GATES
    cluster-test-79b978867f-4x2lw   1/1     Running   8 (23m ago)   8h    172.24.92.67   k8s-master02   <none>           <none>

(2) On Master01, run a ping test

    $ ping -c 2 172.24.92.67
    PING 172.24.92.67 (172.24.92.67) 56(84) bytes of data.
64 bytes from 172.24.92.67: icmp_seq=1 ttl=63 time=0.401 ms 64 bytes from 172.24.92.67: icmp_seq=2 ttl=63 time=0.188 ms --- 172.24.92.67 ping statistics --- 2 packets transmitted, 2 received, 0% packet loss, time 1031ms rtt min/avg/max/mdev = 0.188/0.294/0.401/0.107 ms8.检查Pod 和Pod之间是否能正常通讯 (1)在Master01节点上查看default默认命名空间下的Pod $ kubectl get po -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES cluster-test-79b978867f-4x2lw 1/1 Running 8 (23m ago) 8h 172.24.92.67 k8s-master02(2)在Master01节点上kube-system命名空间下的Pod $ kubectl get po -n kube-system -owide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES calico-kube-controllers-6f6595874c-lgc47 1/1 Running 0 9h 172.27.14.193 k8s-node02 calico-node-5pdrk 1/1 Running 0 9h 192.168.1.35 k8s-node02 calico-node-6zgnd 1/1 Running 0 9h 192.168.1.32 k8s-master02 calico-node-b4ktd 1/1 Running 0 9h 192.168.1.33 k8s-master03 calico-node-bkplf 1/1 Running 0 9h 192.168.1.31 k8s-master01 calico-node-rvnrq 1/1 Running 0 9h 192.168.1.34 k8s-node01 calico-typha-6b6cf8cbdf-llcms 1/1 Running 0 9h 192.168.1.35 k8s-node02 coredns-5db5696c7-9m9s8 1/1 Running 0 9h 172.24.92.65 k8s-master02 metrics-server-6bf7dcd649-v7gqt 1/1 Running 0 9h 172.17.124.1 k8s-node01(3)在Master01节点上进入cluster-test-79b978867f-429xg进行ping测试 $ kubectl exec -it cluster-test-79b978867f-4x2lw -- bash (00:06 cluster-test-79b978867f-4x2lw:/) ping -c 2 192.168.1.33 PING 192.168.1.33 (192.168.1.33) 56(84) bytes of data. 64 bytes from 192.168.1.33: icmp_seq=1 ttl=63 time=0.575 ms 64 bytes from 192.168.1.33: icmp_seq=2 ttl=63 time=0.182 ms --- 192.168.1.33 ping statistics --- 2 packets transmitted, 2 received, 0% packet loss, time 1006ms rtt min/avg/max/mdev = 0.182/0.378/0.575/0.197 ms |
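The first two checks in section 4.14 (every node Ready, every Pod N/N and Running) are done by eye in this article. As a rough sketch, they could also be scripted by filtering the kubectl output with awk. The function names `not_ready_nodes` and `unhealthy_pods` are hypothetical helpers made up for this example, not part of kubectl, and the column positions assume the default output of `kubectl get node` and `kubectl get pod -A` as shown in this article.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get node` output on stdin and prints
# the name of every node whose STATUS column is not exactly "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is reported too.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Hypothetical helper: reads `kubectl get pod -A` output on stdin and prints
# namespace/name for every Pod whose READY column is not N/N or whose
# STATUS is not "Running". Finished Job Pods (Completed) are reported too.
unhealthy_pods() {
  awk 'NR > 1 {
    split($3, ready, "/")
    if (ready[1] != ready[2] || $4 != "Running") print $1 "/" $2
  }'
}

# Usage on Master01 (assumes kubectl is already configured there):
#   kubectl get node   | not_ready_nodes
#   kubectl get pod -A | unhealthy_pods
# Empty output from both commands means the two checks pass.
```

Both helpers only read text from standard input, so they can be tried against saved command output before being pointed at a live cluster.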
